Current Ph.D Projects

XXXVI Cycle: 2020 – 2023

Ph.D Candidate:

Emir Mobedi

Advisors:

Elena De Momi, Arash Ajoudani

Ph.D cycle:

XXXVI

Collaborations:

IIT

Design and Development of Assistive Robotic Technologies for Industrial Applications

In this project, I will propose a novel one-DoF underactuated exoskeleton that allows human workers to carry out their tasks ergonomically. Such a device can assist with both internal loading (resulting from the human body) and external loading (task-related payload). Controller frameworks will also be developed to integrate the exoskeleton into human-robot collaboration (HRC) frameworks. Robot responses will then be shaped to help the worker perform the intended manipulation task in configurations where the effect of external stimuli on human factors is minimal. The proposed framework will be evaluated experimentally at the HRI2 lab. The outcome of my PhD studies will drive radical improvements in the adaptability and time-efficient reconfigurability of HRC systems to the worker’s physical state and tasks, and will contribute to the reduction of musculoskeletal disorders (MSDs).

Ph.D Candidate:

Francesco Iodice

Advisors:

Elena De Momi, Arash Ajoudani

Ph.D cycle:

XXXVI

Collaborations:

IIT

Advanced Human-Robot Interaction and Collaboration

Collaboration between humans and robots is one of the fundamental aspects of Industry 4.0. The objective of this theme is to create advanced predictive control schemes that, integrated with computer vision techniques for detection and tracking and with algorithms for mapping and localization of the robot in the environment, allow collaborative robots to adapt in real time to dynamic factors and human intentions. This flexibility will minimize the risk of injury to workers. A further objective is to extract leader-follower roles and dynamic features such as configuration-dependent stiffness (CDS) from videos of humans performing collaborative tasks (here, two-person wood sawing) and to replicate them in a dual-arm robotic setup. We created a dataset that is not spread across generic classes but is sector-specific, i.e., dedicated exclusively to the industrial environment and human-robot collaboration. Specifically, we described our ongoing collection of the ‘HRI30’ database for industrial action recognition from videos, containing 30 categories of industrial-like actions and 2940 manually annotated clips. The goal was to propose vision techniques for human action recognition and image classification, integrated into an augmented hierarchical quadratic programming (AHQP) scheme to hierarchically optimize the robot’s reactive behavior and human ergonomics. This framework allows the robot to be intuitively commanded in space while performing a task.

Ph.D Candidate:

Fabio Fusaro

Advisors:

Elena De Momi, Arash Ajoudani

Ph.D cycle:

XXXVI

Collaborations:

IIT

Robotic Human-Aware Task Planning And Allocation For Human Robot Collaboration

The rising awareness of worker ergonomics and the flexibility requirements of modern enterprises have called for a radical change in manufacturing processes. As a result, companies have started to focus on the design of new production lines rather than on corrective ex post interventions, which were much more expensive and not always effective. Several new tools, such as collaborative robots (cobots) and wearable sensors and displays (e.g., augmented reality), were introduced with the aim of providing better working conditions for human labour while keeping high levels of productivity, flexibility, and cost-efficiency in manufacturing processes.

Cobots, in particular, have demonstrated the potential to push small and medium enterprises towards highly adaptive and flexible production paradigms. The idea is to exploit robots’ capabilities to plan and allocate tasks in industrial environments, enhancing the efficiency of production lines not only from the enterprise point of view (e.g., reducing cycle times and costs) but also in terms of worker conditions and safety (e.g., reducing workload and improving ergonomics).

Ph.D Candidate:

Francesco Tassi

Advisors:

Elena De Momi, Arash Ajoudani

Ph.D cycle:

XXXVI

Collaborations:

IIT

Augmented Hierarchical Quadratic Programming based control algorithm for improved human-robot collaboration

The number of collaborative robots (Cobots) in human-populated environments has increased exponentially due to their human-comforting properties. Thanks to their strength, rigidity, and precision, Cobots have enabled significant developments in Human-Robot Collaboration (HRC), allowing for greater profitability and redistribution of work, thus reducing Musculoskeletal Disorders (MSDs). The objective is to improve the quality of human-robot collaboration by increasing robots’ flexibility and robustness in real-world scenarios. Helping with repetitive tasks (e.g., pushing, pulling, lifting) and with carrying heavy loads are only some of the features that can improve workers’ ergonomics and reduce MSDs. This collaboration, however, poses multiple open challenges. For example, it is essential for a Cobot to perceive the intentions of the human and to distinguish between collaborative and non-collaborative behaviour. When collaborating, the robot must recognize human actions and understand intentions, since the operator might interact using multiple tools and objects that the robot should recognize to facilitate the current task. In addition, HRC is usually conceived within a shared workspace that is fixed in the work environment (e.g., on a workbench), whereas it might be of interest to let the operator move the shared workspace while continuing with the task (e.g., reaching the next workstation while interacting). This not only puts the human in charge of the operation, accommodating movements and intentions during the manufacturing phase, but also reduces cycle times, since the task is not interrupted. We therefore propose a control framework aimed at increasing human ergonomics in highly dynamic and precise tasks. Multiple experiments on the MOCA robot demonstrate the benefits and improvements of the framework. The broad variety of experiments and their wide applicability confirm the potential for improving human-robot collaboration and reducing MSDs.

Context

The recognition of actions performed by humans and the anticipation of their intentions are important enablers of sociable and successful collaboration in human-robot teams. Meanwhile, robots should be able to deal with multiple objectives and constraints, arising either from the collaborative task or from the human. Action recognition alone does not ensure higher performance when it is applied to the robot under real circumstances. Indeed, state-of-the-art methods solve this problem independently from robot control.

Objectives

The goal is to provide a synergetic framework that fluently integrates action recognition at the control level. To this end, an appropriate control logic should be capable of fully exploiting the Cobot’s redundancy. This is possible by defining multiple priority levels in a stack of tasks through Hierarchical Quadratic Programming (HQP), which solves multiple Quadratic Programming (QP) problems while establishing strict, non-conflicting priorities.
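To make the idea of strict priorities concrete, the following is a minimal sketch and not the controller developed in this project. It assumes equality-only tasks of the form A x = b and resolves them by null-space projection with NumPy, whereas a full HQP also handles inequality constraints with a QP solver at each level. The function name `solve_task_hierarchy` and the toy tasks are hypothetical.

```python
import numpy as np


def solve_task_hierarchy(tasks, n_vars, rcond=1e-9):
    """Solve equality tasks A_k x = b_k in strict priority order.

    tasks: list of (A, b) pairs, highest priority first.
    Each task is solved in the least-squares sense inside the null space
    of all higher-priority tasks, so it can never disturb them.
    """
    x = np.zeros(n_vars)
    null_proj = np.eye(n_vars)  # projector onto the directions still free
    for A, b in tasks:
        A = np.atleast_2d(np.asarray(A, dtype=float))
        b = np.atleast_1d(np.asarray(b, dtype=float))
        A_free = A @ null_proj  # the task restricted to the free directions
        A_free_pinv = np.linalg.pinv(A_free, rcond=rcond)
        # Correct the remaining task error using only the free directions.
        x = x + null_proj @ A_free_pinv @ (b - A @ x)
        # Remove the directions consumed by this task.
        null_proj = null_proj @ (np.eye(n_vars) - A_free_pinv @ A_free)
    return x


# Hypothetical toy stack of tasks (not taken from the project).
tasks = [
    ([[1.0, 1.0, 0.0]], [1.0]),                         # priority 1: x0 + x1 = 1
    ([[1.0, 0.0, 0.0]], [2.0]),                         # priority 2: x0 = 2
    ([[0.0, 0.0, 1.0], [0.0, 1.0, 0.0]], [3.0, 0.0]),   # priority 3: x2 = 3 and x1 = 0
]
x = solve_task_hierarchy(tasks, n_vars=3)
print(x)
```

In this toy case the first two levels fix x0 and x1, so the lowest-priority request x1 = 0 is sacrificed while x2 = 3 is still met, giving approximately [2, -1, 3].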

Contributions

First, an advanced hierarchical robot controller for optimal HRC was created. Second, a human ergonomics score was mapped in the Cartesian workspace. Third, integrating the ergonomics map into the hierarchical controller helped to reduce human fatigue during common collaborative tasks.

Subsequently, vision techniques were employed to perform human action recognition and image classification, which were then integrated into an Augmented Hierarchical Quadratic Programming (AHQP) scheme to hierarchically optimize the robot’s reactive behaviour and human ergonomics. The proposed framework allows one to command the robot in space intuitively and ergonomically while a task is being executed. The experiments performed confirm increased human ergonomics and usability, which are fundamental for reducing musculoskeletal disorders and increasing trust in automation. The implemented framework considers a moving and continuous interaction point for HRC. State-of-the-art studies addressing human ergonomics in HRC either employ fixed robots or consider a precise interaction area (e.g., a workbench for assembly). Here, instead, an HQP-based controller can follow the human while optimizing ergonomics. In addition, this method handles human ergonomics efficiently at the control level: it avoids running computationally intensive human models online, as is mostly done in the literature, and does not rely on noisy or cumbersome acquisitions of all human joint coordinates (e.g., via camera or motion capture hardware). Such methods often require the online solution of the human dynamics/kinematics, which significantly increases computation time. Typically, an optimal posture is found in the human’s joint coordinates and is then processed by both the motion planner and the robot’s controller. With the AHQP-based controller, only one step is required, which reduces cycle times and, just as importantly, allows multiple objectives and constraints to be formulated and enforced hierarchically.
Finally, we integrate a new perception module so that human intentions (e.g., collaborative or non-collaborative with respect to the Cobot) and actions (e.g., walking direction, intended tool to be used in the interaction, object to hand over) can be detected and anticipated to create reactive robot responses.

Ph.D Candidate:

Ke Fan

Advisors:

Elena De Momi, Giancarlo Ferrigno, Hang Su

Ph.D cycle:

XXXVI

Collaborations:

/

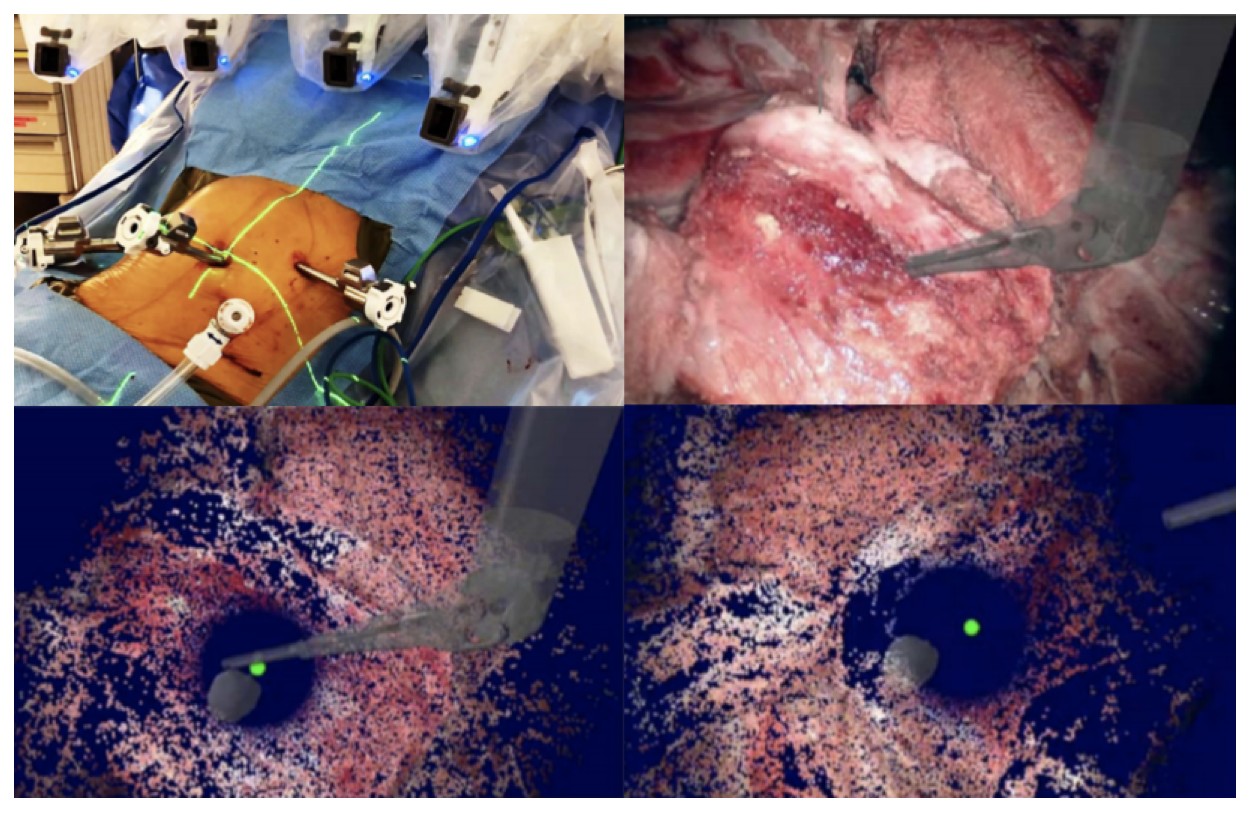

Augmented reality (AR) guidance of the surgeon based on real-time 3D reconstruction of the surgical field

Augmented reality (surface reconstruction and feature tracking) can greatly enhance surgical safety in robot-assisted interventions. Based on real-time 3D reconstruction of the surgical area and on dynamic active constraints, wearable devices provide augmented reality guidance to surgeons and restrict the instrument to a safe area. The demonstration platform is based on the da Vinci surgical system. The laboratory model will be tested with surgeons to ease development of the technology and to validate its acceptance by clinicians.

Ph.D Candidate:

Junling Fu

Advisors:

Elena De Momi, Hang Su

Ph.D cycle:

XXXVI

Collaborations:

/

Human-robot skills transfer during robot-assisted minimally invasive surgery

Robot-assisted minimally invasive surgery (RAMIS) has great potential for improving the accuracy and dexterity of surgeons while minimizing trauma to patients. However, surgeons must perform delicate and complex operations during RAMIS, including cutting, stitching, and knotting. To reduce the complexity of surgical operations and the workload of surgeons, human-robot skill transfer is proposed to improve the practicality of surgical robots.

1) Learning surgical skills from expert surgeons and reproducing these surgical operations is an effective way to enhance the autonomy of the surgical robot in RAMIS. This process includes three stages: demonstration, learning, and reproduction.

2) To improve the flexibility of surgical manipulators and achieve human-like control, advanced control algorithms will be implemented, e.g., variable stiffness control. In addition, haptic feedback will be integrated to provide realistic immersion and enhance safety during RAMIS.

Ph.D Candidate:

Ziyang Chen

Advisors:

Elena De Momi, Giancarlo Ferrigno, Hang Su

Ph.D cycle:

XXXVI

Collaborations:

/

Vision-Driven Automatic Path Planner for Da Vinci surgical robot

In traditional minimally invasive surgery, the surgeon must design the surgical path manually, which is time-consuming and inaccurate. This project focuses on the design of a novel vision-based automatic path planner for the Da Vinci surgical robot. Using only depth images acquired in real time during the operation, a safe and feasible path can be planned automatically, overcoming the disadvantages of the traditional operating mode. The ultimate goal is to provide surgeons with automatic path planning and to improve the safety, accuracy, and real-time performance of minimally invasive surgery. The research first analyzes the mechanical structure and kinematics of the Da Vinci surgical robot. It then carries out a detailed theoretical analysis of advanced computer vision algorithms. Because training the deep learning model for motion decision-making is time-consuming, the research also proposes a new dual model selection method to improve training efficiency.

Ph.D Candidate:

Anna Bucchieri

Advisors:

Elena De Momi, Pietro Cerveri

Ph.D cycle:

XXXVI

Collaborations:

IIT

Novel controls for upper-limb exoskeletons

Context:

New, efficient rehabilitation techniques are needed to help post-stroke patients regain lost motor functions. In particular, rehabilitation robotics has proven effective in assisting different sensorimotor functions by allowing high-intensity and highly repeatable exercises.

Purpose:

Following a bottom-up approach, the PhD project proposes to design novel control strategies for upper-limb exoskeletons for the rehabilitation of stroke patients. In particular, the control strategies will provide Assistance-As-Needed and corrective feedback in an Augmented Reality environment.

XXXV Cycle: 2019 – 2022

Ph.D Candidate:

Zhen Li

Advisors:

Elena De Momi, Jenny Dankelman

Ph.D cycle:

XXXV

Collaborations:

TU Delft

Path planning and real-time re-planning

Given the deformable nature of the surroundings, real-time (RT) planning and control are needed to guarantee that a flexible robot reaches a target site with a desired pose. ESR 13 will implement an accurate kinematic and dynamic model of the flexible robot and incorporate knowledge of the robot’s limitations directly into the planning algorithm. Pre-operatively, the best paths are planned considering the constraints on allowable paths and the location of the anatomic target; intra-operatively, planning also accounts for the uncertainties in the adopted (and identified) flexible robot model and in the collected sensor readings (ESR5).

Advanced exploration approaches will be adapted to each specific clinical scenario and its constraints, as well as to robot constraints such as manipulability. Specific clinically relevant optimality criteria will be identified and integrated. These methods could, for example, keep sharp parts of the instrument (e.g., the tip) away from lumen edges. RT capabilities will make it possible to re-plan the path during the actual operation.

Ph.D Candidate:

Jorge Lazo

Advisors:

Elena De Momi

Ph.D cycle:

XXXV

Collaborations:

ATLAS

Computer Vision and Machine Learning for Tissue Segmentation and Localization

The ATLAS project will develop smart flexible robots that autonomously propel through complex deformable tubular structures. This calls for seamless integration of sensors, actuators, modelling and control.

While contributing to the state of the art, the candidates will become proficient in building, modelling, testing, and interfacing; in short, in integrating basic building blocks into systems that display sophisticated behavior.

Each flexible robot will be equipped with extero- and proprioceptive sensors (such as FBG) to provide information on position and orientation, as well as with on-board miniaturized cameras. Additionally, RT image acquisition will be performed using US sensors placed externally in contact with the patient’s body. To track the position of the flexible robot and simultaneously identify the environment conditions, RT US image algorithms will be developed. Deep learning approaches combining Convolutional Neural Networks (CNNs) and automatic classification methods (e.g., SVM) will extract characteristic features from the images to automatically detect:

1) Flexible robot shape

2) The hollow lumen edges positions (to be integrated with ESR5)

3) Information on surrounding soft tissue shape and location

RT performance will be achieved by parallel optimization loops.

Ph.D Candidate:

Luca Fortini

Advisors:

Elena De Momi, Arash Ajoudani

Ph.D cycle:

XXXV

Collaborations:

IIT

Online Robotic and Feedback Strategies for Ergonomic Improvement in Industry

Today, manufacturing industries face an increasingly competitive market. Production lines must be flexible and less structured to adapt easily to new workflows. As a result, industrial workers may be exposed to greater risk in terms of posture and load, adding to the already dramatic statistics on Musculoskeletal Disorders (MSDs). My PhD project aims to improve the online monitoring of human body status in order to prevent harmful behaviours through countermeasures that are passive (informative feedback systems) and/or active (cobots, exoskeletons). To guarantee continuous monitoring, the subject is equipped with a set of sensors whose outputs are prerequisites for estimating custom ergonomic indexes. Depending on the task performed, a multi-objective ergonomics-based optimisation is run to establish the most suitable way of executing the task. The outcome is then communicated to the feedback interface and/or to the robotic counterpart.

Ph.D Candidate:

Luca Sestini

Advisors:

Giancarlo Ferrigno, Nicolas Padoy

Ph.D cycle:

XXXV

Collaborations:

ATLAS

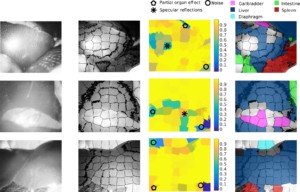

Image-Based Tool-Tissue Interaction Estimation

One of the keys to bringing situational awareness to surgical robotics is the automatic recognition of the surgical workflow within the operating room (OR). Indeed, human-machine collaboration requires an understanding of the activities taking place both outside and inside the patient.

This project will focus on the modelling and recognition of tool-tissue interactions during surgery to produce human-understandable information to be exploited during procedures inside the OR. The project will involve:

1) Developing a model to represent the actions performed by the endoscopic tools on the anatomy (e.g., through triplets: tool, action performed, anatomy acted upon)

2) Developing and training a deep-learning model to link the procedural knowledge describing the surgery to digital signals (such as endoscopic videos)

3) Exploiting robot model and kinematic information in order to limit the need for manual annotations for model training.
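As an illustration of the triplet representation mentioned in the first point, the sketch below shows one possible way to store and count (tool, action, anatomy) labels per video frame. It is an assumption for illustration only, not the model developed in the project; the class name `InteractionTriplet`, the helper `count_triplets`, and the example labels are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class InteractionTriplet:
    """One tool-tissue interaction: which tool does what to which anatomy."""
    tool: str
    action: str
    anatomy: str


def count_triplets(frame_labels):
    """Count how often each triplet occurs over a sequence of frames.

    frame_labels: list of lists, one list of triplets per video frame.
    """
    return Counter(t for frame in frame_labels for t in frame)


# Hypothetical annotations for three frames (labels are made up).
retract = InteractionTriplet("grasper", "retract", "gallbladder")
dissect = InteractionTriplet("hook", "dissect", "cystic duct")
frames = [[retract], [retract, dissect], []]

counts = count_triplets(frames)
print(counts[retract], counts[dissect])
```

Making the dataclass frozen keeps triplets hashable, so they can be used directly as dictionary or `Counter` keys when aggregating annotations.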

Ph.D Candidate:

Chun-Feng Lai

Advisors:

Elena De Momi, Jenny Dankelman

Ph.D cycle:

XXXV

Collaborations:

ATLAS

Distributed follow-the-leader control for minimizing tissue forces during soft-robotic endoscopic locomotion through fragile tubular environments

Building on prior experience in developing snake-like instruments for skull base surgery, the PhD candidate will elaborate these mechanical concepts into advanced soft-robotic endoscopes able to propel themselves forward through fragile tubular anatomic environments to hard-to-reach locations in the body. The ESR has two main goals:

• to use advanced 3D printing to create novel snake-like endoscopic frame structures that can be printed in a single step without assembly and that easily integrate cameras, actuators, biopsy channels, and glass fibres. FEM simulations will be used to optimize a) shapes that are easy to bend yet hard to twist and compress (e.g., by using helical shapes), and b) minimal distributions of actuators enabling complex and precisely controlled motion.

• to develop follow-the-leader locomotion schemes for moving through fragile tubular environments (e.g. colon or ureter) and evaluate this ex-vivo in anatomic tissue phantoms.
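The follow-the-leader idea in the second goal can be sketched as follows: every joint behind the tip is placed on the path the tip has already traced, so the body does not sweep sideways against the lumen wall. This is a simplified geometric illustration under stated assumptions, not the locomotion scheme of the project: it spaces joints by arc length along a recorded polyline, which only approximates rigid segments on curved paths. The function name and the sample path are hypothetical.

```python
import numpy as np


def follow_the_leader(tip_path, n_segments, segment_length):
    """Place joints on the path already traced by the tip.

    tip_path: (N, D) array of recorded tip positions, oldest first.
    Returns (n_segments + 1, D) joint positions, tip first, spaced by
    segment_length measured along the path (clamped at the path start).
    """
    tip_path = np.asarray(tip_path, dtype=float)
    steps = np.linalg.norm(np.diff(tip_path, axis=0), axis=1)
    arc = np.concatenate(([0.0], np.cumsum(steps)))  # arc length at each sample
    # Arc-length coordinate of each joint, counted back from the tip.
    targets = arc[-1] - segment_length * np.arange(n_segments + 1)
    targets = np.clip(targets, 0.0, arc[-1])
    # Interpolate each coordinate of the path at the target arc lengths.
    return np.column_stack(
        [np.interp(targets, arc, tip_path[:, d]) for d in range(tip_path.shape[1])]
    )


# Hypothetical tip path: straight along x, then a right-angle turn along y.
path = [(0, 0), (1, 0), (2, 0), (3, 0), (3, 1), (3, 2)]
joints = follow_the_leader(path, n_segments=3, segment_length=1.0)
print(joints)
```

With this path the four joints land at (3, 2), (3, 1), (3, 0) and (2, 0), i.e. the body bends around the same corner the tip passed through.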

XXXIV Cycle: 2018 – 2021

Ph.D Candidate:

Alessandro Casella

Advisors:

Elena De Momi, Leonardo De Mattos, Sara Moccia

Ph.D cycle:

XXXIV

Collaborations:

IIT

Computer Vision Technologies for Computer-Assisted Fetal Therapies

Twin-to-twin transfusion syndrome (TTTS) may occur during identical twin pregnancies when abnormal vascular anastomoses in the monochorionic placenta result in uneven blood flow between the fetuses. If not treated, the risk of perinatal mortality of one or both fetuses can exceed 90%. To restore the blood flow balance, the most effective treatment is minimally invasive fetoscopic laser surgery. A limited field of view (FoV), poor visibility, fetal movements, high illumination variability, and limited maneuverability of the fetoscope all add to the complexity of the surgery. In the last decade, the medical field has seen a dramatic revolution thanks to advances in surgical data science, such as Artificial Intelligence (AI) and in particular Deep Learning (DL). However, little effort has been devoted to fetal surgery applications, mainly due to the limited availability of datasets and the complexity of fetoscopic videos, which have low resolution and lack texture and color contrast.

Considering these challenges and limitations in the state of the art, this PhD project focuses on the exploration of deep learning techniques for the development of tools for computer-assisted fetal surgery.
